Insight

How Can Vision AI Recognize What It Has Never Seen Before? LVIS and the Future of Object Detection

Hyun Kim

Co-Founder & CEO | 2025/08/25 | 15 min read

How Can Vision AI Recognize What It Has Never Seen Before? LVIS and the Future of Object Detection

Many companies that introduce AI to their industrial sites are often surprised by how inflexible it can be. When unpredictable defects occur on the production line or new products are introduced, they often find themselves needing to label massive amounts of new data and retrain their models from scratch—incurring significant time and cost. As a result, many ambitious AI initiatives stall or are eventually abandoned.

But what if AI could instantly recognize and classify a new component it has never seen before, an unexpected contaminant, or even a subtle quality defect—with just a single explanation or a handful of examples? This would create new opportunities to maximize operational efficiency and respond quickly to changing market conditions.

In this post, we introduce the key technologies that are making this a reality, along with the essential dataset used to validate their performance: LVIS. As AI evolves from mere memorization to true adaptation, we’ll explore the tangible value this shift brings to real-world industrial applications.

1. Why We Needed a Dataset That Mirrors the Real World: The Philosophy Behind LVIS

The evolution of computer vision technology has always gone hand-in-hand with the advancement of datasets. From MNIST (handwritten digits) to ImageNet (1,000 common object classes) to COCO (80 classes for object detection and segmentation), datasets have played a central role in improving AI model performance. However, traditional datasets have shared a common limitation: they were built for controlled environments.

These datasets consist of a limited number of classes, each with a relatively balanced number of examples—making it difficult for models to learn the full complexity of the real world. For instance, a model trained on the COCO dataset might learn to detect “person” and “bicycle” with equal weight. But in real industrial settings, specific parts or equipment may appear frequently, while unexpected contaminants or certain abnormal signs may be extremely rare.

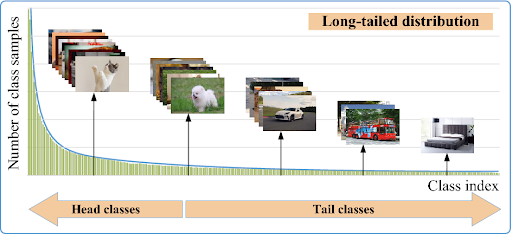

This stark imbalance often observed in real-world settings—where a few objects appear frequently while a vast number of others appear rarely—is known as the “long-tail distribution.” Traditional models have struggled with this “long tail,” primarily because they never had the opportunity to learn these rarely occurring objects, limiting their effectiveness in real-world deployments.

Example of long-tail distribution

To address this gap, Facebook AI Research (FAIR) released the LVIS (Large Vocabulary Instance Segmentation) dataset. Designed primarily as a research benchmark for advancing computer vision, LVIS offers a large-scale, fine-grained annotation dataset at the vocabulary level.

How LVIS Differs from Traditional Datasets

Unmatched Scale and Diversity: LVIS contains over 1.6 million images and more than 2 million instance annotations. With more than 1,203 object categories—far surpassing COCO’s 80—it offers a vastly richer vocabulary. This encourages models to learn not only different types of objects but also hierarchical relationships between them, fostering the ability to recognize more fine-grained and nuanced distinctions.

Intentionally Designed Imbalance: LVIS is intentionally constructed to mimic the real-world long-tail distribution. Each category is explicitly tagged based on its frequency of appearance—either frequent, common, or rare. This allows researchers to measure not just overall average precision (AP), but also APr—performance on rare object categories, which is especially challenging for new AI models. APr serves as a critical metric for evaluating a model’s true generalization capabilities.

High-Quality Instance Segmentation: Beyond bounding boxes, LVIS provides pixel-level instance segmentation data that precisely outlines object boundaries. This significantly raises the bar for model performance, as it requires a deeper understanding of object shapes—an essential capability for high-precision inspection and analysis in industrial environments.

In short, LVIS takes AI models out of the comfort zone of controlled environments and into the unpredictable “wild” of real-world scenarios, serving as a rigorous yet realistic benchmark for performance validation.

2. A New Paradigm for Measuring True AI Reasoning: Zero-Shot Evaluation

The emergence of realistic datasets like LVIS has fundamentally transformed how we evaluate AI models. The key question is no longer “How well did the model memorize the data?” but rather, “How well can it reason based on various prompts from a dataset it has never seen before?”

This is the essence of zero-shot object detection. In this setting, a model is evaluated on a benchmark without having received any training on that dataset—like a student taking a test without ever having read the textbook, guided only by a few hints.

Recent studies use 3 prompting protocols to evaluate a model’s reasoning ability under different prompting strategies in this zero-shot setup.

Dissecting the Evaluation Protocols: 3 Prompting Methods

1. Text Protocol: Language-Based Prompting

What it measures: The model’s ability to perform zero-shot object detection using text prompts of all category names from the benchmark

How it works: When given category names such as “person,” “car,” or “cat” as text prompts, the model detects the corresponding objects in the image. This is the same approach used in standard open-vocabulary object detection settings.

Practical significance: This is the most intuitive and scalable method. New categories can be added simply by providing their text names, enabling virtually unlimited expansion.

2. Visual-G (Generic): Generalized Visual Prompting

What it measures: The model’s ability to perform zero-shot object detection using pre-computed average visual embeddings as prompts

How it works:

- Preparation: Randomly sample a certain number (“N”) of images (default: 16) per category from each benchmark’s training set

- Embedding extraction: Extract N visual embeddings using the ground truth (GT) bounding box of each image

- Averaging: Compute an average from N embeddings to create a representative embedding for each category (e.g., 80 for COCO)

- Inference: Detect regions in the test image that are most similar to the averaged embeddings

Practical significance: This is effective for detecting objects that are hard to describe with text alone, such as those with unique textures, shapes, or color patterns across various clusters.

3. Visual-I (Interactive): Interactive Visual Prompting

What it measures: The model’s ability to detect other instances of the same category using a ground truth (GT) box within the test image as a visual prompt

How it works:

- Assume the test image contains a certain number (“M”) of categories

- Randomly select one GT box per category (or convert it to a center point)

- Use this as the visual prompt to detect other instances of the same category in the image

Practical significance: While relatively simpler than Visual-G, this method supports a wide range of applications such as annotation automation and object counting. The user only needs to point to one object, and the model automatically finds the rest—making it a very practical approach.

Why All 3 Prompting Methods Matter

Each protocol measures a different aspect of AI reasoning:

- Text: Conceptual reasoning through language-visual alignment

- Visual-G: Pattern matching using generalized visual prototypes

- Visual-I: Interactive reasoning based on immediate visual similarity

In real industrial environments, all three reasoning capabilities are essential. Depending on the situation and nature of the data, the most effective prompting method can be selected and applied.

3. Next-Generation AI Technologies Transforming Industries

The LVIS dataset and various evaluation methods such as Text, Visual-G, and Visual-I serve as key milestones that reflect where AI technology is headed—and how it will shape the broader industrial landscape.

Revolutionizing the Cost of Dataset Construction: There is no longer a need to create tens of thousands of labeled images for every new object. With just a simple text description, a few visual examples, or even a user’s intuitive prompt, AI can now perform new tasks instantly, drastically reducing both the time and cost of building datasets.

Enabling Site-Specific AI Systems: AI is evolving from a static command executor to an intelligent partner that can instantly adapt to on-site situations. Through natural language instructions, visual cues, or intuitive user interaction, AI can now instantly understand and execute complex tasks on demand.

Greater Flexibility in Unpredictable Situations: In fields like manufacturing, logistics, and safety management, unpredictable scenarios occur frequently. However, these next-gen AI methodologies and technologies provide a foundation for flexible adaptation without prior training—allowing for real-time recognition and response to unexpected contaminants, equipment failures, or newly introduced products.

Conclusion

We are entering a new era of AI—one where models are no longer rigid systems that can only handle pre-defined tasks, but dynamic agents capable of adapting to new and unforeseen situations. Tested against the real-world complexities introduced by LVIS, today’s AI demonstrates not just memorization, but the ability to learn and adapt instantly through language, vision, and interaction.

The Text, Visual-G, and Visual-I protocols offer an objective framework for evaluating these multidimensional reasoning capabilities and serve as essential guidelines for developing AI systems that are best suited for real-world industrial use.

The evolution of vision AI is solving long-standing inefficiencies in industrial environments, improving resilience to unpredictable events, and ultimately unlocking higher productivity and competitiveness for enterprises.

Superb AI will soon unveil a project that showcases this innovative ability to “understand the unseen” through various types of prompts. Stay tuned as we unlock the full potential of AI to transform industrial operations!

Related Posts

![[Physical AI Series 4] A Strategy for Successful Physical AI Adoption: A 4-Step Execution Roadmap to Maximize ROI](https://cdn.sanity.io/images/31qskqlc/production/0768d35871b72179ee4901195cd5d4bb1a582340-2000x1125.png?fit=max&auto=format)

Insight

[Physical AI Series 4] A Strategy for Successful Physical AI Adoption: A 4-Step Execution Roadmap to Maximize ROI

Hyun Kim

Co-Founder & CEO | 18 min read

![[Physical AI Series 3] The Brain of Physical AI: Robot Foundation Models and Data Strategy](https://cdn.sanity.io/images/31qskqlc/production/d7e66e5540113ef407d54fe6013920ef0cbffd6e-2000x1125.png?fit=max&auto=format)

Insight

[Physical AI Series 3] The Brain of Physical AI: Robot Foundation Models and Data Strategy

Hyun Kim

Co-Founder & CEO | 7 min read

Insight

Physical AI — From How Robots Learn to Big Tech Moves and Data Strategy

Hyun Kim

Co-Founder & CEO | 15 min read

About Superb AI

Superb AI is an enterprise-level training data platform that is reinventing the way ML teams manage and deliver training data within organizations. Launched in 2018, the Superb AI Suite provides a unique blend of automation, collaboration and plug-and-play modularity, helping teams drastically reduce the time it takes to prepare high quality training datasets. If you want to experience the transformation, sign up for free today.