
[Physical AI Series 3] The Brain of Physical AI: Robot Foundation Models and Data Strategy

Hyun Kim

Co-Founder & CEO | 2026/02/19 | 7 min read

![[Physical AI Series 3] The Brain of Physical AI: What Is a Robot Foundation Model?](https://cdn.sanity.io/images/31qskqlc/production/d7e66e5540113ef407d54fe6013920ef0cbffd6e-2000x1125.png?fit=max&auto=format)

From “Automation” to “Autonomy”: The GPR Era Is Coming

In Physical AI Series 1 and 2, we saw the beginning of a new era—one where AI gains a physical body and starts interacting with the real world. Now, Physical AI is on the verge of another major leap. We are moving beyond the era of “automation,” where robots repeat a single predefined task, and entering the era of “autonomy,” where robots flexibly carry out multiple missions across diverse environments. At the center of this shift is the GPR (General-Purpose Robot).

This wave of change is no longer driven by technical curiosity alone—it is being validated by the movement of capital. According to a J.P. Morgan report, investment in U.S. robotics startups surged from $7 billion in 2020 to more than $12 billion in 2024, with industrial automation leading the funding momentum. In China, PaXini Tech (a competitor to EngineAI) raised approximately $140 million, further signaling the market’s growing expectations.

But the key to this transformation is no longer hardware sophistication alone. The real engine behind this next leap is the robot’s AI-driven “brain.” In Series 3, we take a deep dive into the latest technology trends powering GPRs—and the practical data strategies that will determine who wins in this new paradigm.

What Makes a GPR “Think”? (Sense–Think–Act)

A GPR’s behavior can be understood as a continuous three-step loop: Sense (perception) → Think (reasoning) → Act (execution). The fundamental difference between traditional robots and GPRs lies in the dramatic advancement of the “Think” step.

- Sense (Perception): Advanced sensors—such as 3D vision and tactile sensors—collect environmental data with high precision, much like human senses. The richer the robot’s perception of the world, the higher the quality of decisions its “brain” can make.

- Act (Execution): More capable actuators, including robot arms and grippers, precisely carry out the outcomes of “thinking” in the physical world. A recent video released by Figure AI, showing its humanoid robot F.02 naturally performing household tasks such as folding laundry, is a representative example of how far refined “Act” capabilities have progressed.

- Think (Reasoning): Robot foundation models (Foundation Models for Robotics) serve as the brain of GPRs, bringing an entirely new level of intelligence through capabilities such as the following:

  - Understanding natural language commands: Robots can interpret complex, abstract human instructions, such as “Pick up the blue cup on the table and place it in the sink,” and break them down into executable subtasks.

  - Situation reasoning and generalization: Even when encountering unfamiliar objects or unexpected obstacles, robots can infer the most reasonable action from the vast data they were trained on. This means you no longer need to hand-code countless “what-if” scenarios. The dexterity demonstrated by Figure AI’s F.02 was made possible by Helix, a Vision-Language-Action (VLA) model. Another example of the rise of robot foundation models is the newly introduced ‘Skild Brain’ by Skild AI, which attempts to teach robots physical-world common sense by combining large-scale simulation data with internet video data. (Reference: Zero-shot and open-world, https://superb-ai.com/en/resources/blog/zero)

  - Sim-to-Real (simulation-based learning): Robots learn skills safely and quickly through tens of thousands of trial-and-error iterations in virtual simulation environments. They then transfer those learned capabilities into the real world, so AI models can advance without physical constraints or real-world risk.
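To make the Sense → Think → Act loop concrete, here is a minimal Python sketch. It is an illustration only, not how Helix, Skild Brain, or any real robot foundation model works: the `Observation` type, the `think` function, and the subtask strings are all hypothetical, and the “model” is a hard-coded rule that stands in for learned reasoning.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    """Hypothetical perception output: names of objects currently in view."""
    visible_objects: List[str]


def think(instruction: str, obs: Observation, done: List[str]) -> List[str]:
    """Toy stand-in for a robot foundation model.

    Returns the subtasks still to be executed. A real model would infer
    these from language and sensor data; here one pattern is hard-coded.
    """
    plan: List[str] = []
    if "blue cup" in instruction and "blue cup" in obs.visible_objects:
        plan = ["reach(blue cup)", "grasp(blue cup)",
                "move_to(sink)", "release(blue cup)"]
    return [step for step in plan if step not in done]


def run_loop(instruction: str,
             sense: Callable[[], Observation],
             act: Callable[[str], None],
             max_steps: int = 10) -> List[str]:
    """Repeat Sense -> Think -> Act until no subtasks remain."""
    done: List[str] = []
    for _ in range(max_steps):
        obs = sense()                              # Sense: read the scene
        remaining = think(instruction, obs, done)  # Think: plan what is left
        if not remaining:
            break
        act(remaining[0])                          # Act: execute one subtask
        done.append(remaining[0])
    return done


if __name__ == "__main__":
    scene = Observation(visible_objects=["blue cup", "table", "sink"])
    run_loop("Pick up the blue cup on the table and place it in the sink",
             sense=lambda: scene, act=print)
```

The loop senses again before every action, so a change in the scene (for example, the cup disappearing from view) changes the plan on the next pass. That re-planning is the part a foundation model is expected to handle far more generally than a hard-coded rule can.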

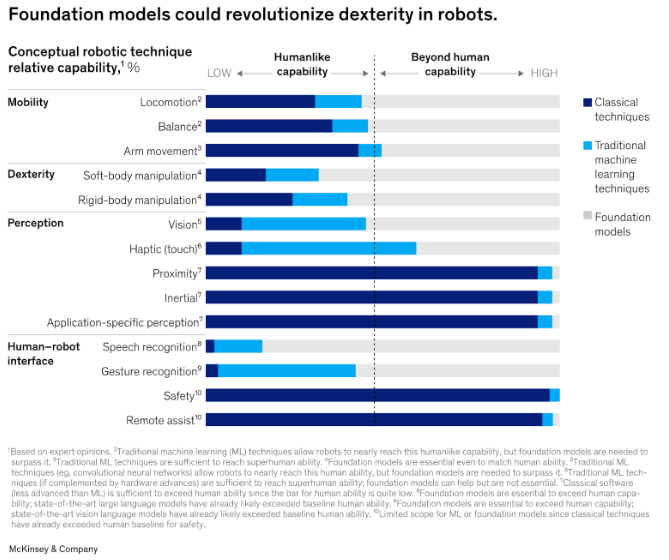

(Potential of foundation models to transform robotic capabilities. Source: McKinsey)

New Data Requirements for “Thinking Robots”

The new paradigm of GPRs and AI unlocks enormous potential—but it also introduces data challenges on a completely different level. To build successful AI robots, we must overcome the following data bottlenecks.

Challenge 1: The Complexity of Multi-Modal Data

For a GPR to understand and act in the world like a human, simple 2D images are not enough. Imagine teaching a robot how to make coffee. You would need to collect and label, in an integrated way: (1) video footage of the coffee machine, (2) data capturing the machine’s 3D shape, (3) tactile sensor data recording force and pressure when handling a cup, (4) a natural language instruction such as “Make me a latte,” and (5) whether each action succeeds or fails. The first major hurdle is figuring out how to manage these heterogeneous “modes” of data efficiently within a single workflow.
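One way to picture this is as a single record type that ties the five kinds of data together. The Python sketch below is a hypothetical schema for the coffee example, not a Superb AI format or any standard; every field name, unit, and file path convention is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    """One time-aligned slice of a demonstration (all fields hypothetical)."""
    timestamp_s: float
    video_frame_path: str             # (1) video footage
    point_cloud_path: str             # (2) 3D shape data
    grip_force_n: float               # (3) tactile reading, in newtons
    action: str                       # what the robot attempted
    succeeded: Optional[bool] = None  # (5) outcome label; None until labeled


@dataclass
class Episode:
    """A full demonstration tied to one natural language instruction."""
    instruction: str                  # (4) e.g. "Make me a latte"
    steps: List[Step] = field(default_factory=list)

    def unlabeled_steps(self) -> List[Step]:
        """Steps that still need a success/failure label."""
        return [s for s in self.steps if s.succeeded is None]

    def success_rate(self) -> Optional[float]:
        """Share of labeled steps that succeeded, or None if none are labeled."""
        labeled = [s for s in self.steps if s.succeeded is not None]
        if not labeled:
            return None
        return sum(s.succeeded for s in labeled) / len(labeled)
```

Keeping all modalities in one time-aligned record is the design choice that matters here: a label such as “this grasp failed” is only useful if it can be traced back to the exact frame, point cloud, and force reading it describes.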

Challenge 2: A Widening Sim-to-Real Gap

Simulation is essential for training GPRs, but virtual environments inevitably differ from reality. The real world contains countless variables: subtle shifts in lighting, the true texture of objects, unexpected friction, and more. This sim-to-real gap is one of the biggest hurdles to commercializing GPRs, and even industry leaders are not immune. Recent reports that Tesla has struggled to meet its humanoid robot production targets, and that its robotaxi business has faced regulatory barriers in California, underscore how hard it is to move from controlled development into real-world deployment. No matter how advanced the technology, it can fail when confronted with unpredictable real-world conditions.

The core question, then, is this: to close the gap, which real-world data should you select, how much of it, and how should you use it to fine-tune models trained in simulation? The answer ultimately determines how those models perform in the real world.

Superb AI: A Partner That Provides Both the “Brain” and the “Fuel” for GPRs

The data challenges outlined above are major barriers to successful GPR development. Superb AI’s data-centric MLOps platform is built to solve these complexities—serving as a powerful “data engine” that awakens the GPR’s “brain.” In addition, Superb AI lowers the barrier to launching robotics projects with ZERO, an industry-tailored vision foundation model.

- Solution 1: Unified Multi-Modal Data Management

Superb AI provides an environment where you can centrally manage and label every data type required for GPR training (images, video, 3D sensor data, text, sensor logs, and more) within a single platform. This eliminates the inefficiencies caused by using separate tools for different data types and enables consistent lifecycle management of Physical AI data, helping AI develop a more holistic understanding of the world.

- Solution 2: Intelligent Data Curation to Reduce the Sim-to-Real Gap

Beyond simply managing synthetic data generated in simulation alongside real-world data, Superb AI uses AI-based data curation to intelligently identify the most effective real-world data for closing the sim-to-real gap. For example, if a simulation-trained model repeatedly fails in “low-light environments” or on “reflective surfaces,” our platform can proactively surface real-world data under those exact conditions—then raise its labeling and training priority. The result is a set of precisely selected, high-quality data that can be used to fine-tune custom AI models for real-world performance.

- Solution 3: ZERO — A Lightweight, Fast Starting Point

ZERO is an industry-tailored vision foundation model built on zero-shot technology, enabling instant detection of new object types without requiring additional retraining. By understanding natural language commands and reasoning through context, ZERO helps robots perceive and judge objects they have never encountered before. If you want to start a general-purpose robotics project quickly and efficiently, start with ZERO.
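The curation idea described under Solution 2 can be sketched as a simple prioritization rule: measure where the simulation-trained model fails, then move real-world samples captured under those conditions to the front of the labeling queue. The Python below is a rough illustration under stated assumptions, not Superb AI’s platform API or algorithm; the condition tags (such as `low_light`), the function names, and the scoring rule are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def failure_rates(results: Iterable[Tuple[Set[str], bool]]) -> Dict[str, float]:
    """Compute a failure rate per condition tag.

    `results` holds one (condition tags, succeeded) pair per evaluation
    of the simulation-trained model.
    """
    totals: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for tags, succeeded in results:
        for tag in tags:
            totals[tag] += 1
            if not succeeded:
                failures[tag] += 1
    return {tag: failures[tag] / totals[tag] for tag in totals}


def prioritize(pool: Dict[str, Set[str]],
               rates: Dict[str, float],
               budget: int) -> List[str]:
    """Pick up to `budget` unlabeled real-world samples to label first.

    A sample is scored by the worst failure rate among its tags, so data
    from the conditions where the model struggles most comes first.
    Ties are broken by sample id to keep the ordering deterministic.
    """
    def score(sample_id: str) -> float:
        return max((rates.get(t, 0.0) for t in pool[sample_id]), default=0.0)

    ranked = sorted(pool, key=lambda sid: (-score(sid), sid))
    return ranked[:budget]
```

For example, if evaluations tagged `low_light` fail two times out of three while `daylight` runs never fail, a sample tagged `low_light` is ranked ahead of one tagged `daylight`. The `budget` parameter reflects the practical constraint behind the “how much data” question: labeling capacity is limited, so it goes where the model is weakest.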

In the GPR Era, Competition Means a Software Race

The rise of general-purpose robots (GPRs) is no longer a distant future—it is becoming a real technology reshaping the industrial landscape today. As new players such as China’s Fourier Robotics continue to emerge, the global race is accelerating.

In this era of relentless competition, the key to advantage is software. The true winners will not be the companies building the most advanced hardware, but the ones that can develop and continuously upgrade the most intelligent software—the “brain”—at the fastest pace. To do that, you need both: a powerful robot foundation model as the “brain,” and a high-performance data engine as the “fuel” that supports it. If either one is missing, you cannot stay ahead in the GPR race.

If you’re exploring a general-purpose robotics project, Superb AI can help you move faster than anyone else.

About Superb AI

Superb AI is an enterprise-level training data platform that is reinventing the way ML teams manage and deliver training data within organizations. Launched in 2018, the Superb AI Suite provides a unique blend of automation, collaboration, and plug-and-play modularity, helping teams drastically reduce the time it takes to prepare high-quality training datasets. If you want to experience the transformation, sign up for free today.