
Superb AI Achieves Phase 1 Milestone in the State-Run Proprietary AI Foundation Model Project (1.08M Robot Data)

Hyun Kim

Co-Founder & CEO | 2026/02/06 | 10 min read

Since 2025, the AI landscape has been shifting rapidly beyond on-screen intelligence toward the era of Physical AI: systems that can interact with the real world. The core of AI is moving from understanding language in a digital environment to understanding the physical world and taking action within it.

But what does it actually take for a robot to understand and carry out a request like, “Can you do the dishes?” Understanding spoken words as text is not enough. A robot must be able to locate a sink in a complex kitchen, distinguish between fragile glassware and heavy cookware, visually judge how much to turn a faucet to produce the right water flow, and physically manipulate objects accordingly.

High-quality vision data, especially RGB-D data that captures 3D space and depth, remains scarce worldwide. Scarcer still is publicly available data that reflects the everyday home environments and living patterns of a specific country, in this case Korea.

This is the bottleneck Superb AI is focused on solving—while helping advance Korea’s proprietary foundation models to global standards. Superb AI supplies high-quality training data for Vision-Language-Action (VLA) models, enabling large-scale AI systems to move beyond text, fully understand visual context, and ultimately be deployed on robots that can perform real-world actions.

In this post, we summarize Superb AI’s key outcomes from Phase 1 of the Korean government’s Proprietary AI Foundation Model Project, organized around three questions: what we did, why it matters, and how we did it.

TL;DR — Superb AI’s Key Phase 1 Outcomes

- Collected 1.08 million RGB-D frames (raw data) from 50 Korean home environments

- Converted 300,000 frames into model-ready assets (processed data) for training and evaluation

- Unified the scene information required for robot learning into a single dataset, captured with a 17-camera multi-view rig and integrating 3 different views (angles)

1. Capturing 1.08M “Meaningful” Frames from Korean Home Environments

Have you ever seen robot videos on YouTube? Robots cooking in spacious kitchens with large island counters may look impressive, but would they move just as smoothly in the compact, L-shaped corner kitchens common in Korean apartments, or in crowded utility rooms packed with appliances?

During Phase 1 of the Proprietary AI Foundation Model Project, Superb AI recruited 50 Korean households and built a dataset tailored to real Korean living environments.

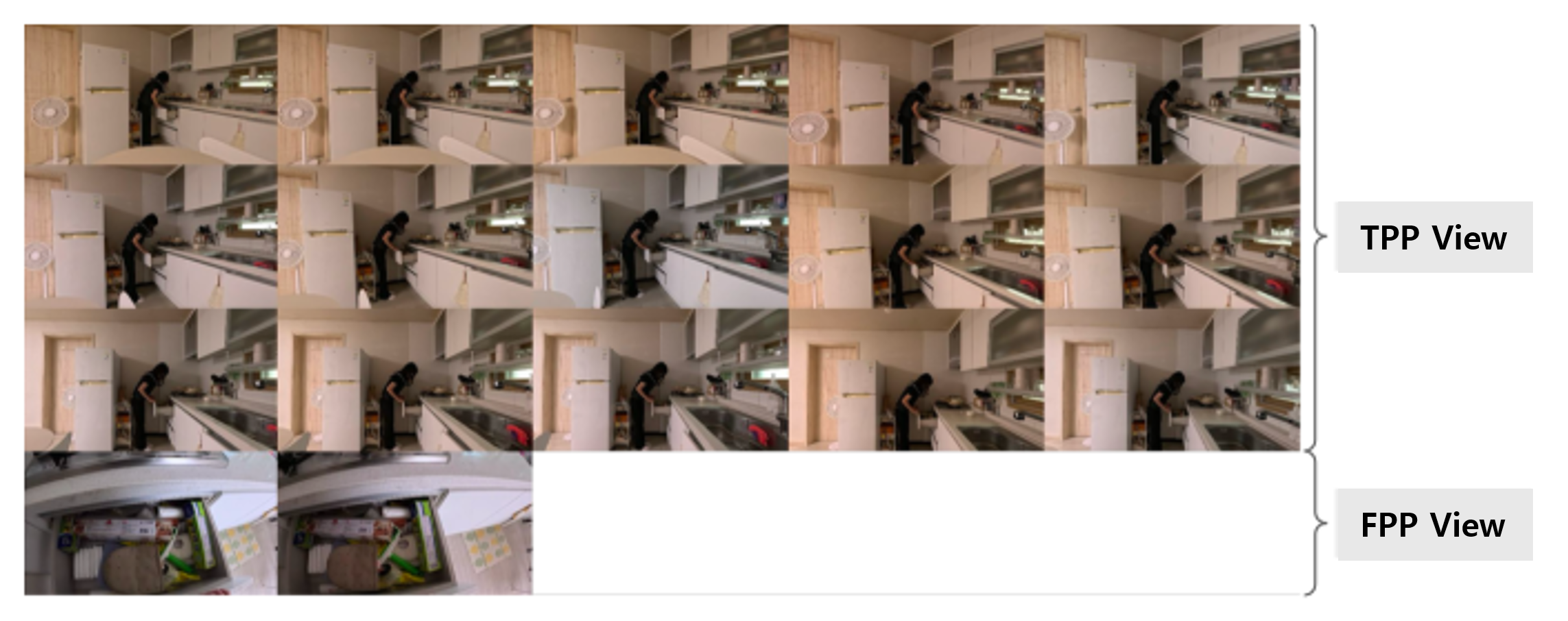

- 🎥 17 cameras, captured simultaneously: This wasn’t just “filming.” To faithfully replicate a robot’s 3D field of view, Superb AI recorded with 17 GoPros synchronized using timecode sync technology. Fifteen cameras captured a third-person view of the entire space, while two cameras were dedicated to the robot’s perspective—capturing both a first-person view and 3D scans that allowed 2D video to be reconstructed into 3D spatial information.

(Example of the camera setup)

(Sample camera setup: Third-person and first-person perspectives)

- 🏠 150 household task scenarios: Superb AI defined 150 household actions a robot would need to perform—such as cooking, washing dishes, folding laundry, and organizing items—and staged approximately 7,500 situations to secure a large-scale dataset.
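To illustrate what timecode synchronization makes possible, here is a minimal sketch that groups frames from several cameras by a shared timecode, so that each group forms one multi-view sample. The `Frame` record, the camera labels, and the integer frame-count timecode are all assumptions made for illustration; the actual capture pipeline is not described in this post.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    camera_id: str  # hypothetical camera label, e.g. "third_person_01"
    timecode: int   # hypothetical shared timecode, expressed as a frame count
    path: str       # hypothetical location of the image on disk


def group_by_timecode(frames, expected_cameras):
    """Return {timecode: {camera_id: Frame}} for timecodes seen by every camera."""
    groups = defaultdict(dict)
    for frame in frames:
        groups[frame.timecode][frame.camera_id] = frame
    # Keep only complete multi-view samples; incomplete ones are dropped.
    return {
        tc: views
        for tc, views in sorted(groups.items())
        if set(views) == set(expected_cameras)
    }


cameras = ["third_person_01", "third_person_02", "ego_left"]
frames = [Frame(cam, tc, f"{cam}/{tc:06d}.png") for cam in cameras for tc in range(3)]
# Simulate one camera dropping a frame at timecode 1.
frames = [f for f in frames if not (f.camera_id == "ego_left" and f.timecode == 1)]

synced = group_by_timecode(frames, cameras)
print(sorted(synced))  # -> [0, 2]
```

Dropping incomplete groups is only one possible policy; a real pipeline might instead interpolate or tolerate a missing view.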

2. Moving Beyond “Seeing” to “Understanding”

Superb AI’s capabilities go beyond collecting data at scale. The key is processing data in a way that enables AI to reason about the world.

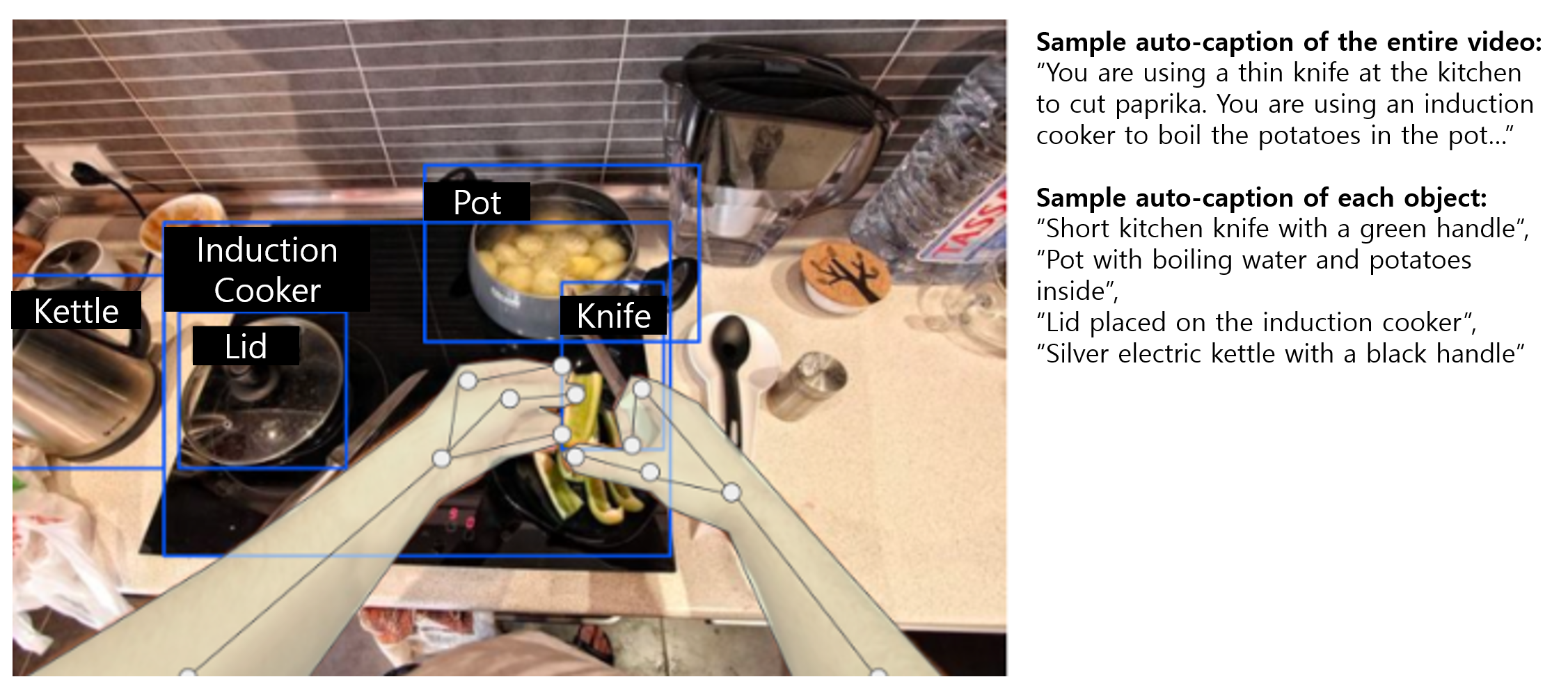

Visual Reasoning

Rather than attaching simple descriptions like “a person picked up a cup,” Superb AI created detailed captions that include context and causality—for example: “To drink water (intent), the person grasped the handle of a transparent glass cup with his right hand (specific action).” This allows AI to learn not only what happened, but why it happened—ultimately helping it infer human intent.
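One way to picture such a caption is as a structured record that keeps intent and action in separate fields. The sketch below is a hypothetical schema, not Superb AI's actual annotation format; the class name `ReasoningCaption` and all field names are assumptions.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ReasoningCaption:
    """Hypothetical caption record that separates intent from action."""
    intent: str    # why the action happens
    actor: str     # who performs it
    action: str    # what is done
    target: str    # the object (and part) acted on
    effector: str  # what performs the action, e.g. which hand

    def to_sentence(self) -> str:
        return f"{self.intent}, {self.actor} {self.action} {self.target} with {self.effector}."


caption = ReasoningCaption(
    intent="To drink water",
    actor="the person",
    action="grasped",
    target="the handle of a transparent glass cup",
    effector="the right hand",
)
print(caption.to_sentence())
print(json.dumps(asdict(caption), indent=2))
```

Keeping the fields separate would let a training pipeline query by intent or by action independently of the rendered sentence.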

(Example of Superb AI’s data labeling and captioning)

(Example of binary visualization of a depth map from a first-person view)

Focusing on Two Core Viewpoints

To help robots move naturally in home environments, Superb AI designed its data around two essential perspectives: “eyes for understanding space” and “eyes for manipulation.” In Phase 1, Superb AI focused on building high-quality data specifically for these two viewpoints.

Eyes that see the whole space (3-view integration)

By capturing an entire environment simultaneously from multiple cameras, the dataset enables learning of scene context—such as room layout, movement paths, and relationships between objects. This forms the basis for a robot to understand “what is where.”

Eyes that see my hands (egocentric)

When a robot picks up and moves an object, the full scene is less important than the target object in that moment. Using a first-person stereo view, the dataset helps the model learn fine manipulation information such as distance, angle, and depth between the hand and the object.
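For intuition on why a stereo view carries distance information, the standard pinhole stereo relation gives depth as focal length times baseline divided by disparity. The sketch below applies that textbook formula; the post does not say how depth is actually derived in this dataset, and the focal length, baseline, and disparity values are made-up illustrative numbers.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Pinhole stereo relation: depth Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Illustrative numbers only; none of these come from the actual rig.
focal_length_px = 1000.0
baseline_m = 0.10
for disparity_px in (200.0, 100.0, 50.0):
    z = depth_from_disparity(disparity_px, focal_length_px, baseline_m)
    print(f"disparity {disparity_px:5.1f} px -> depth {z:.2f} m")
```

Halving the disparity doubles the estimated depth, which is why nearby objects such as a hand and a cup are resolved far more finely than the far wall of a room.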

When these two viewpoints are combined, a robot can plan actions in a way that preserves overall context while still capturing the precise details required for manipulation.

3. Superb AI’s Differentiated Technical Edge in Physical AI

Superb AI’s Physical AI competitiveness—validated through Phase 1 of the Proprietary AI Foundation Model Project—can be summarized in four key strengths.

Hybrid Data Design

Superb AI strategically combines high-quality studio data captured under controlled conditions with crowdsourced data collected from real homes—where environments are complex, cluttered, and unpredictable. This is what enables robots to perform reliably not only in clean lab settings, but also in real households where children run around and everyday items are left out.

Turning 400M Frames into 300K High-Value Assets

A simple calculation shows the scale of data that robot learning requires: 150 actions performed in each of 50 home environments and recorded by 15 third-person cameras add up to roughly 400 million frames.

Manually reviewing and labeling all of that data is impractical. This is where Superb AI’s Auto-Curate technology played a critical role.
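The arithmetic behind that figure can be sketched as follows. The counts come from this post; the assumptions that every situation is recorded once by each of the 15 cameras, and the frame rates used at the end, are ours and are not stated anywhere in the post.

```python
homes = 50
actions = 150
third_person_cameras = 15
total_frames = 400_000_000  # "roughly 400 million", as stated in the post

situations = homes * actions                    # 7,500 staged situations
recordings = situations * third_person_cameras  # assumes one clip per camera per situation
frames_per_recording = total_frames / recordings

print(f"situations: {situations:,}")
print(f"recordings: {recordings:,}")
print(f"implied frames per recording: {frames_per_recording:,.0f}")

# The frame rate is NOT given in the post; these values are assumptions.
for assumed_fps in (30, 60):
    seconds = frames_per_recording / assumed_fps
    print(f"at {assumed_fps} fps: about {seconds:.0f} s per recording")
```

Under these assumptions each clip works out to a few thousand frames, which is at least plausible for a single household task.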

- Step 1 (400M → 1.08M): Removed repetitive or static segments with little informational value, and secured 1.08 million frames of raw data with meaningful training value.

- Step 2 (1.08M → 300K): From the raw data, further selected only the most performance-critical segments, specifically edge cases and key action scenes where models are most likely to struggle.
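To give a feel for what removing static segments can mean in practice, here is a deliberately simple sketch that keeps a frame only when it differs enough from the last kept frame. This is a generic frame-differencing heuristic, not Superb AI's Auto-Curate algorithm, whose internals are not described here; the function names and the threshold are assumptions.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference between two equally sized flat pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def select_non_static(frames, threshold):
    """Return indices of frames that differ enough from the last kept frame."""
    if not frames:
        return []
    kept = [0]  # always keep the first frame as the initial reference
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[kept[-1]]) >= threshold:
            kept.append(i)
    return kept


# Synthetic clip: frames 0-2 are identical, frame 3 changes, frame 4 repeats frame 3.
still = [0] * 16
moved = [50] * 16
clip = [still, still, still, moved, moved]

print(select_non_static(clip, threshold=10.0))  # -> [0, 3]
```

Selecting edge cases and key action scenes, as in Step 2, would need model-aware signals rather than pixel differences, so this sketch only relates to Step 1.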

As a result, Superb AI distilled roughly 400 million frames down to 300,000 highly refined training assets—the data that truly makes models smarter. This is not just about speeding up workflows. It is a differentiated data pipeline capability that dramatically reduces data-building costs while maximizing training efficiency.
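The reduction can be expressed as retention ratios, using only the frame counts quoted in this post (the 400 million figure is approximate, so the percentages are approximate too):

```python
stages = [
    ("captured", 400_000_000),   # "roughly 400 million"
    ("raw data", 1_080_000),
    ("processed data", 300_000),
]

initial = stages[0][1]
previous = initial
for name, count in stages:
    step_pct = 100 * count / previous    # share kept relative to the previous stage
    overall_pct = 100 * count / initial  # share kept relative to everything captured
    print(f"{name:>14}: {count:>11,} frames | {step_pct:7.3f}% of previous | {overall_pct:7.3f}% of captured")
    previous = count
```

In other words, well under one percent of the captured frames survive the first step, and a bit more than a quarter of those survive the second.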

Quality Management

Data quality directly determines how capable an AI model can become. Superb AI applies strict quality control to produce data that is not merely large-scale, but also reliable and valid for robotics use.

- Stage 1 (Labelers): Verify adherence to labeling guidelines

- Stage 2 (Expert reviewers): Experienced specialists cross-validate labeling precision and consistency

Security and Trust

Superb AI holds globally recognized security certifications, including ISO 27001 and SOC 2 Type II. All data is collected with rigorous consent procedures, and sensitive information—such as faces—is securely protected through de-identification, including automatic mosaic processing.
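As a rough picture of what mosaic processing does to a sensitive region, the sketch below pixelates a rectangular box in a small grayscale image by replacing each tile with its mean value. It is a generic illustration, not Superb AI's de-identification pipeline; the function name, the box coordinates, and the idea that the box came from a face detector are all assumptions.

```python
def mosaic_region(image, top, left, height, width, block):
    """Return a copy of `image` with the given box pixelated in block x block tiles.

    image: 2D list of grayscale values; the box must lie inside the image.
    """
    out = [row[:] for row in image]
    for by in range(top, top + height, block):
        for bx in range(left, left + width, block):
            ys = range(by, min(by + block, top + height))
            xs = range(bx, min(bx + block, left + width))
            tile = [image[y][x] for y in ys for x in xs]
            mean = sum(tile) // len(tile)
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out


# 4x4 synthetic image; pretend a (hypothetical) face detector returned the top-left 2x2 box.
image = [
    [10, 20, 1, 2],
    [30, 40, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
]
blurred = mosaic_region(image, top=0, left=0, height=2, width=2, block=2)
for row in blurred:
    print(row)
```

Pixels outside the box are left untouched, so the rest of the scene remains usable for training.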

What Phase 1 Delivered—and What Comes Next

The core achievement of Superb AI’s Phase 1 work in the Proprietary AI Foundation Model Project was securing high-quality vision data tailored to Korean residential environments—and converting it into assets that can be used for real AI model training. The 1.08 million raw frames and 300,000 processed frames built by Superb AI will serve as foundational infrastructure for domestic robot AI systems to recognize complex indoor spaces with greater precision.

Now, the project is moving beyond “scene understanding” and into a new stage: direct interaction with the physical world. Looking ahead, technical development will follow two major directions:

- Advanced manipulation: Going beyond object recognition to understand physical properties such as handle angles and centers of mass—so robots can grasp and control objects precisely

- Action-based AI: Strengthening multimodal capabilities to convert abstract commands like “I’m thirsty” into concrete task plans—such as “find a cup and pour water”—and execute them

In line with this roadmap, Superb AI plans to significantly expand first-person perspective datasets and language-action paired datasets. Through this, Superb AI aims to build robust Physical AI models that operate reliably across diverse environmental conditions—and to demonstrate Korea’s real competitiveness in AI technology.
