Insight

[Physical AI Series 1] Jensen Huang Declares “Physical AI” the Next Wave of AI—What Is It?

Hyun Kim

Co-Founder & CEO | 2026/02/03 | 9 min read

![[Physical AI Series 1] What is Physical AI?](https://cdn.sanity.io/images/31qskqlc/production/980aaf5d759f59fcbbc7bdd7752c9c89c6b3e5a5-2000x1125.png?fit=max&auto=format)

1. Why “Physical AI” Now?

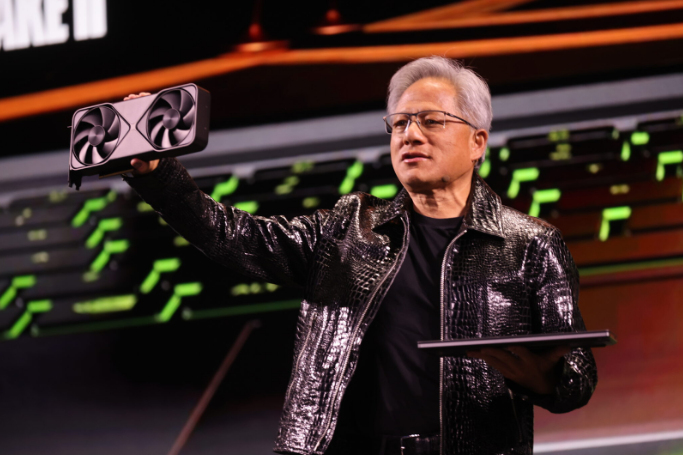

“The next frontier of AI is physical AI. AI is now beginning to understand the laws of physics.”

— Jensen Huang, CEO of NVIDIA (CES 2025 keynote)

Jensen Huang, the CEO of NVIDIA—the company leading the AI revolution—was unequivocal. Beyond generating text and images, AI is now entering the era of Physical AI: systems that understand the real world, interact with it, and perform physical tasks.

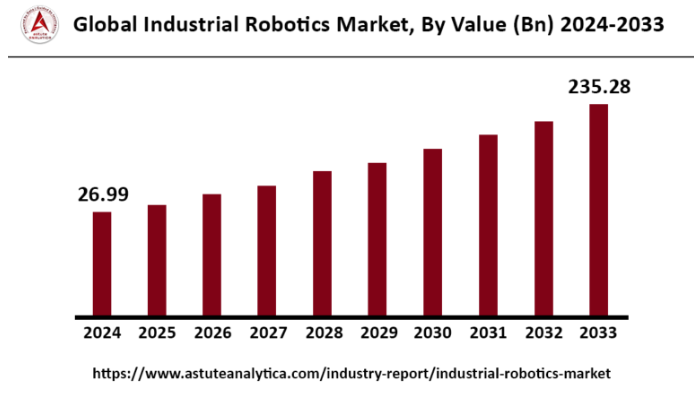

Physical AI is more than a passing tech trend—it is reshaping industrial paradigms. According to an industrial robotics market report by global research firm Astute Analytica, the global industrial robotics market is projected to grow from $26.99 billion (about KRW 37.7 trillion) in 2024 to $235.28 billion (about KRW 328.6 trillion) by 2033.
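To put the projection in perspective, the two figures above can be turned into an implied annual growth rate. The sketch below is only a back-of-the-envelope check: it assumes 2024 is the base year and 2033 the final year (nine compounding periods), and the function name `cagr` is our own, not something from the report. Under those assumptions the market grows roughly ninefold, which works out to about 27% per year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted from the report cited above (USD billions).
market_2024 = 26.99
market_2033 = 235.28

# Assumption: 2024 is the base year, so there are 2033 - 2024 = 9 periods.
growth_multiple = market_2033 / market_2024
annual_rate = cagr(market_2024, market_2033, years=2033 - 2024)

print(f"Growth multiple: {growth_multiple:.2f}x")
print(f"Implied CAGR: {annual_rate:.1%}")
```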

This projected growth of nearly nine times reflects a clear shift: Physical AI is beginning to solve long-standing challenges in traditional industries such as manufacturing, logistics, and healthcare, while creating entirely new value. This series covers what you need to know: the core concepts of Physical AI, the latest technology trends, real-world industry use cases, and the most important factor for successful adoption. This first post starts with the concept itself and its history.

2. What Is Physical AI?

Definition: AI That Interacts With the Real World

Physical AI refers to AI systems that perceive the physical environment through sensors (cameras, LiDAR, etc.), make decisions based on the information they collect, and act on the real world through actuators—such as robot arms or wheels—creating physical impact.
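The perceive, decide, act cycle in this definition can be sketched in a few lines. This is a toy illustration, not how any real robot stack is built: the one-dimensional `World`, the `perceive`, `decide`, and `act` functions, and the numbers (a wall 5 m away, a 1 m safety gap, 0.5 m steps) are all hypothetical stand-ins for a sensor, a policy, and an actuator.

```python
from dataclasses import dataclass


@dataclass
class World:
    """Toy 1-D world: a robot at `position` facing a wall at `wall` (meters)."""
    position: float = 0.0
    wall: float = 5.0


def perceive(world: World) -> float:
    """Sensor stand-in: measure the distance to the wall."""
    return world.wall - world.position


def decide(distance: float, safe_gap: float = 1.0, step: float = 0.5) -> float:
    """Policy stand-in: step forward only if the safety gap is preserved."""
    return step if distance - step >= safe_gap else 0.0


def act(world: World, move: float) -> None:
    """Actuator stand-in: apply the chosen motion to the world."""
    world.position += move


def run(world: World, max_steps: int = 100) -> int:
    """Repeat perceive -> decide -> act until the policy chooses to stop."""
    steps = 0
    for _ in range(max_steps):
        move = decide(perceive(world))
        if move == 0.0:
            break
        act(world, move)
        steps += 1
    return steps


world = World()
steps_taken = run(world)
print(steps_taken, world.position)
```

The point of the structure is that each pass through the loop starts from a fresh measurement, so the robot reacts to the world as it is rather than to a plan made in advance.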

The Fundamental Difference From Software AI

Conventional software AI—like ChatGPT—can be compared to a “brain in a jar.” It learns from digital data and generates outputs such as text and images, but it cannot directly intervene in the physical world.

Physical AI, by contrast, is a “brain in a body.” It must operate amid real-world complexity and uncertainty—interacting with constantly changing conditions to complete missions. Opening a door, for example, means seeing the shape and material of the handle, deciding how much force to apply, and then acting by turning it with a robot arm. That is Physical AI.

Related Concepts: Embodied AI and Robotic AI

- Embodied AI: Literally “AI with a body,” often used interchangeably with Physical AI. In academia and research, the term is especially common when emphasizing learning through interaction with the environment.

- Robotic AI: Robots equipped with AI—one of the most representative implementations of Physical AI. If Physical AI is the broader concept, Robotic AI is the concrete outcome of that concept embodied in robotic hardware.

3. The History of Physical AI: From Cybernetics to AI Factories

The term “physical AI” itself has only recently been popularized as AI leaders like Jensen Huang began linking today’s AI capabilities with robotics.

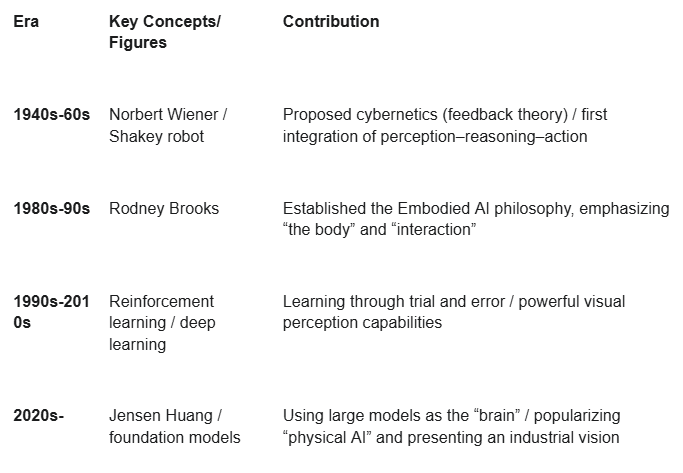

But the deeper idea behind it—intelligence that has a body, interacts with the physical world, and learns through that interaction—has existed since the earliest days of AI research. Its evolution can be broadly traced through four stages.

1. Early Stage (1940s–1960s): Cybernetics and the First “Acting Machines”

The conceptual seed of physical AI can be found in Norbert Wiener’s Cybernetics (1948). Cybernetics explored how living beings and machines regulate themselves and interact with their environments through feedback loops. This aligns directly with physical AI’s core operating principle: environment perception → action → outcome feedback.
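A feedback loop of this kind can be illustrated with a simple proportional controller, where each action is scaled by the gap between the measured state and the target. This is a minimal sketch under our own assumptions: the function names, the gain of 0.5, and the toy environment (where the action shifts the state directly) are hypothetical and chosen only to make the loop visible.

```python
def feedback_step(state: float, target: float, gain: float = 0.5) -> float:
    """One pass of the loop: perceive the error, act on it, return the new state."""
    error = target - state      # perception: how far are we from the goal?
    action = gain * error       # action: correct in proportion to the error
    return state + action       # toy environment: the action shifts the state


def run_feedback(state: float, target: float, steps: int = 10,
                 gain: float = 0.5) -> list[float]:
    """Run the loop repeatedly and record the state after every step."""
    history = [state]
    for _ in range(steps):
        state = feedback_step(state, target, gain)
        history.append(state)
    return history


history = run_feedback(state=0.0, target=10.0)
print([round(s, 3) for s in history])
```

With a gain of 0.5 the remaining error halves on every step, so the state closes in on the target without ever needing a model of the environment beyond the measured error.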

By the 1960s, these ideas began taking tangible form:

- The first industrial robot, Unimate (1961): It repeatedly executed a fixed program and replaced human labor on the production lines at General Motors. While it had no “intelligence,” it marked the arrival of a “body” that AI would eventually inhabit.

- The first physical AI, Shakey (1966–1972): Developed at Stanford Research Institute (SRI), “Shakey” is often regarded as the true prototype of physical AI. It perceived its surroundings through cameras and sensors, reasoned to navigate and avoid obstacles while performing tasks, and then acted by moving on wheels. This was the first example in AI research history to integrate perception–reasoning–action into a single system.

(Shakey, Source: SRI)

2. Philosophical Shift (1980s–1990s): “The Body Creates Thought”

In the 1980s, Professor Rodney Brooks at MIT challenged mainstream AI research. He criticized “abstract intelligence” approaches that tried to understand the world through complex symbols and rules—like a “brain in a jar”—and argued that intelligence emerges through a body that collides with the real world.

His Nouvelle AI approach is captured in his well-known paper, Elephants Don't Play Chess, which showed that even without a perfect internal model of the world, interactions among simple behavioral rules can produce complex, intelligent behavior. This reinforced the idea that embodied experience is a prerequisite for intelligence—and became a major philosophical foundation for today’s embodied AI and physical AI.

3. The Evolution of Learning (1990s–2010s): Reinforcement Learning and Deep Learning

For physical AI to become smarter on its own, it needs the ability to learn. During this period, Reinforcement Learning advanced significantly—enabling systems to learn behaviors that maximize rewards through trial and error.
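Trial-and-error learning can be shown with one of the simplest reinforcement learning setups, an epsilon-greedy multi-armed bandit. The sketch below is illustrative only: the three "arms" stand for three candidate behaviors with success probabilities we made up (0.2, 0.5, 0.8), and the agent has to discover the best one purely from the rewards it receives. Real robot learning involves states, sequences of actions, and far richer algorithms.

```python
import random


def epsilon_greedy_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Estimate each arm's value from sampled rewards using epsilon-greedy choice."""
    rng = random.Random(seed)
    n_arms = len(reward_probs)
    counts = [0] * n_arms     # how often each arm was tried
    values = [0.0] * n_arms   # running average reward per arm

    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                        # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit
        # Hypothetical environment: reward 1 with the arm's success probability.
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]    # incremental mean

    return values, counts


values, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
best_arm = max(range(len(values)), key=lambda a: values[a])
print("estimated values:", [round(v, 2) for v in values])
print("best arm:", best_arm)
```

The `epsilon` parameter controls the trade-off between trying something new and repeating what has worked so far, which is the core tension in any trial-and-error learner.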

Then, in 2012, Deep Learning triggered a breakthrough in Computer Vision, giving physical AI a powerful set of “eyes.” Robots could finally recognize objects with high accuracy and understand what they were seeing. The combination of learning capability (reinforcement learning) and perception capability (deep learning) laid the groundwork for physical AI to move beyond the lab and begin tackling real-world problems.

4. The Present (2020s–): The Rise of Foundation Models and the Mainstreaming of Physical AI

With the emergence of foundation models powering today’s generative AI, physical AI has reached a historic inflection point. Foundation models give robots a powerful “brain”—enabling them to understand human language, apply commonsense reasoning, and plan complex tasks on their own. Technologies that evolved in parallel for decades—robotics, AI, computer vision, and reinforcement learning—are now converging into one massive wave.

At the Hill & Valley Forum in April 2025, Jensen Huang, CEO of NVIDIA, summarized the past decade of AI progress in three stages:

- Stage 1 (Perception AI): Recognizing and understanding information about the world through computer vision and other sensing, such as images, sound, vibration, and temperature (e.g., AlexNet, 2012). This stage is the foundation for technologies such as autonomous vehicles that recognize lanes and factory automation systems that detect defects.

- Stage 2 (Generative AI): Understanding meaning and translating or generating information in new forms (e.g., English–French translation, text-to-image generation).

- Stage 3 (Reasoning AI): Using reasoning to solve problems and interpret new situations, including creating “agentic AI” (digital robots) that can carry out complex work.

Huang argued that the next wave is physical AI: AI capable of physical reasoning, meaning it understands the laws of physics, including friction, inertia, and cause and effect. Examples of physical reasoning include predicting where a ball will roll, estimating the force needed to grasp an object without damaging it, and inferring that a pedestrian may be behind a car. When these capabilities are applied to robots, robots stop being machines that merely follow fixed commands and become intelligent systems that can judge and act in the unpredictable real world. This, he emphasized, is key to addressing the global labor shortage.
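Two of these examples have simple textbook counterparts that show what "physical reasoning" has to capture. The sketch below is not how a physical AI model works internally (such models learn these regularities from data); it only writes out the underlying physics under simplifying assumptions: a ball slowing at a constant deceleration set by a rolling-resistance coefficient, and a two-finger gripper holding an object by friction alone. The function names and example values are hypothetical.

```python
G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance(speed: float, resistance_coeff: float) -> float:
    """Distance (m) a ball rolls before constant deceleration mu * g stops it."""
    return speed ** 2 / (2 * resistance_coeff * G)


def min_grip_force(mass: float, friction_coeff: float, contacts: int = 2) -> float:
    """Minimum normal force (N) per contact so friction can carry the weight."""
    return mass * G / (contacts * friction_coeff)


# Assumed example values: a ball released at 2 m/s with rolling resistance 0.05,
# and a 0.5 kg object held by two fingers with friction coefficient 0.5.
print(f"Ball stops after {stopping_distance(2.0, 0.05):.2f} m")
print(f"Grip at least {min_grip_force(0.5, 0.5):.2f} N per finger")
```

The second function gives only the lower bound for not dropping the object; grasping "without damaging it" also needs an upper bound that depends on the object's material, which is exactly the kind of judgment a physical AI system has to make from perception.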

In other words, physical AI is not a concept that appeared overnight. It is the inevitable outcome of AI’s evolution—built layer by layer over more than half a century through the philosophies and technologies of countless pioneers.

4. A First Step Toward the Physical AI Era

So far, we’ve explored what physical AI means—and the sweeping journey of how it took shape over more than half a century.

From the theoretical seeds of cybernetics, to Shakey’s historic first steps, to Rodney Brooks’ philosophical shift emphasizing the importance of the body, and finally to the arrival of foundation models as a powerful “brain,” physical AI has become an unstoppable trend—culminating in what Huang called the “next wave of AI.”

Now that we’ve answered the question, “What is physical AI?”, a more important—and more exciting—question remains: “So how will this technology change reality?”

In Part 2, we’ll take a deeper dive into the core technologies that actually drive physical AI (such as computer vision and reinforcement learning), and explore concrete industry use cases and future outlooks across manufacturing, logistics, and healthcare.

Superb AI is a partner for companies preparing for the physical AI era—helping make it easy to bring AI into real-world operations. In your company’s bold challenge ahead, Superb AI’s expertise can become your strongest weapon. If you have questions about adopting physical AI, leave a message below—we’ll reach out right away.

